Sam Stephenson is the creator of the Prototype JavaScript framework and of rbenv, an alternative to RVM. He recently wrote an interesting article titled “You are not your code” about why programmers are not their product. Are you?

Yet this is exactly what programmers often do: they identify themselves with their code. After all, they have written every line and every character. They have invented the names, the functions, and the structures. Nobody else knows their code as well as they do. They own their “precious” code. Programmers are like little gods who like to rule their own universe.

The advantage is obvious: if the software is successful and you identify with it, it is your success. The drawback: if the software is not successful and you identify with it, it is your failure. This is similar to a sports team. If the team wins, everybody wants to share in the success. If the team keeps losing, everybody starts to blame each other: the president blames the coach, the coach blames the players, the players blame each other, and so on.

Claiming ownership of something often works because people are subject to many cognitive biases. One of them is what psychologists call the fundamental attribution error: we tend to over-emphasize personality-based explanations and to ignore other influences, such as situational ones. We also tend to attribute great events to great men, an idea known as the great man theory.

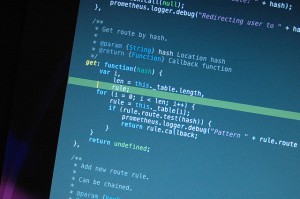

Whether this is a good thing or not is debatable, but a developer of a modern web application can hardly claim to be its only author. In the early days of PCs, only the programmer and the CPU mattered, at least if you programmed directly in assembly language. Then came the first high-level programming languages for systems with disk operating systems like CP/M or the various forms of DOS. Object-oriented programming languages arrived together with graphical user interfaces, and for the web, comfortable high-level languages with garbage collection like Java, Ruby, and Python appeared. Today there are four or five layers between the programmer and the CPU. A Ruby program, for example, is written in Ruby, the Ruby interpreter is written in C, C is compiled into assembly, and assembly boils down to machine code.

And this is only the language itself. A modern web application is like an iceberg: the part above the surface is written by you and your team, the part below by countless others. Besides the language and the tools for editing and debugging, a web application relies on many different servers and systems:

- the operating system, like macOS or Linux

- the web server like Apache or Nginx

- the web server modules like Phusion Passenger

- the database server like MySQL or PostgreSQL

- the caching server like Memcached or Redis

- the mail server or mail transfer agent, like Postfix or Sendmail

- the message queue system, like ActiveMQ, RabbitMQ or ZeroMQ

Then there are the languages, version control systems and version managers, frameworks and libraries, gems and plugins, written by countless other developers:

- languages like C, Ruby, Python or JavaScript

- version control systems like SVN or Git, and Ruby version managers like RVM or rbenv

- frameworks like Rails or Django

- libraries like Prototype or jQuery

- gems and plugins for pagination, authentication, etc.

To build a modern application, you set up and configure different servers, choose a language, a framework and suitable libraries, and finally select various plugins and gems and stick them together in a unique way. Having done all this, you can hardly claim that you created the system. And yet we tend to do exactly that.

So if you are a Ruby developer and you have produced more than others, it is not because you are taller or smarter. It is probably because you are standing on the shoulders of many others.

(The source code photo is from Flickr user nyuhuhuu.)